Green Data Centers – How to Choose an Eco-Friendly IT Partner?

A Guide for Companies Looking for IT Providers Using Energy-Efficient Infrastructure and Renewable Energy

We would like to present the last part of our Docker series, in which we discuss the differences between ARG and ENV, docker-compose, networking in containers, orchestration, and the Docker API. If you are just starting your Docker journey, we encourage you to read the previous parts (#1, #2 and #3) first, so that your knowledge is built on a solid foundation.

ARG vs ENV

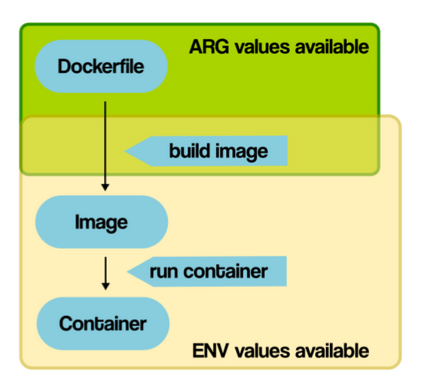

In the previous articles, we mentioned environment variables, which affect how the deployed application behaves and can be passed to the container on the command line or through a .env file. These are not the only option, however: an application can be influenced both by ENV environment variables and by ARG build arguments. The difference lies in when the values are available. ARG values exist only while the image is being built, whereas ENV values are present in the running container and can still be set or overridden at launch. Figure 1 illustrates this scope.

Figure 1. The Scope of ARG and ENV (vsupalov.com).

From the command line, a build that sets an ARG may look like this:

docker build --build-arg MY_ARG=custom_value -t myimage .

Meanwhile, using ENV looks like this:

docker run --env MY_VAR=hello alpine

or like this:

docker run --env-file env_file alpine
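The same commands can also be assembled programmatically, which makes it easier to see which values belong to the build and which to the run. The following is a minimal Python sketch; the helper names `build_cmd`, `run_cmd` and `env_file_text` are illustrative assumptions and not part of Docker. It only constructs and prints argument lists and does not invoke Docker.

```python
import shlex


def build_cmd(tag, build_args, context="."):
    """Assemble `docker build`; each build_args entry becomes a --build-arg flag."""
    cmd = ["docker", "build"]
    for name, value in build_args.items():
        cmd += ["--build-arg", f"{name}={value}"]
    return cmd + ["-t", tag, context]


def run_cmd(image, env=None, env_file=None):
    """Assemble `docker run`; env entries become --env flags."""
    cmd = ["docker", "run"]
    for name, value in (env or {}).items():
        cmd += ["--env", f"{name}={value}"]
    if env_file is not None:
        cmd += ["--env-file", str(env_file)]
    return cmd + [image]


def env_file_text(variables):
    """Render the contents of an env file: one NAME=value pair per line."""
    return "".join(f"{name}={value}\n" for name, value in variables.items())


# Print the three commands shown in the text above.
print(shlex.join(build_cmd("myimage", {"MY_ARG": "custom_value"})))
print(shlex.join(run_cmd("alpine", env={"MY_VAR": "hello"})))
print(shlex.join(run_cmd("alpine", env_file="env_file")))
```

To actually execute one of these lists, it could be passed to `subprocess.run(...)` on a machine where Docker is installed.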

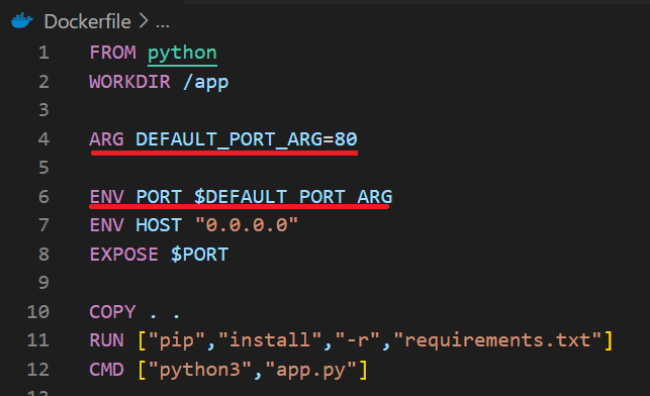

Both mechanisms can be combined in a single Dockerfile.

Figure 2. Example Dockerfile Using ARG and ENV.

In the above illustration, it can be seen that ARG has a default value of 80, which can be overridden with the --build-arg switch at build time. The environment variable derived from it can in turn be overridden when running the container with --env / --env-file.
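The override order can be modeled in a few lines of Python. This is only a sketch of the resolution order, not Docker's implementation, and it assumes a Dockerfile containing `ARG DEFAULT_PORT=80` followed by `ENV PORT=$DEFAULT_PORT`; both variable names are hypothetical, since the figure's exact contents are not reproduced here.

```python
DOCKERFILE_ARG_DEFAULT = "80"  # hypothetical: ARG DEFAULT_PORT=80


def image_env(build_arg=None):
    """Environment baked into the image by `ENV PORT=$DEFAULT_PORT` at build time."""
    default_port = build_arg if build_arg is not None else DOCKERFILE_ARG_DEFAULT
    return {"PORT": default_port}


def container_env(image, run_env=None):
    """Environment of the running container: --env / --env-file win over the image."""
    env = dict(image)
    env.update(run_env or {})
    return env


# No overrides: the ARG default ends up in the container.
print(container_env(image_env()))
# --build-arg DEFAULT_PORT=8080 changes what is baked into the image.
print(container_env(image_env("8080")))
# --env PORT=3000 at run time takes precedence over the image value.
print(container_env(image_env("8080"), {"PORT": "3000"}))
```

Note that the run-time override applies to the ENV variable only; the ARG itself no longer exists once the image has been built.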

Docker Compose

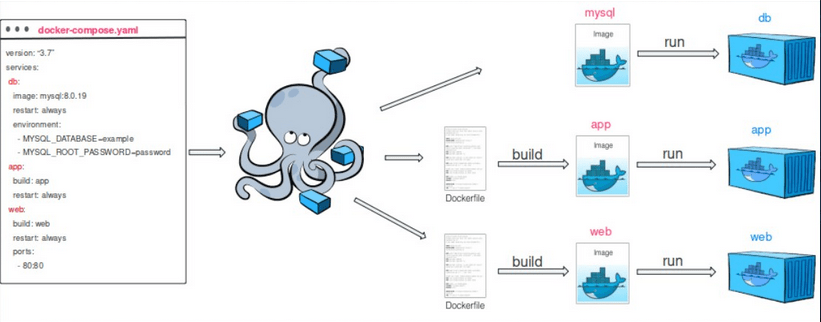

Docker offers an important tool called docker-compose; the name refers both to the docker-compose / docker compose command and to the docker-compose.yml file. The tool was created in response to the way applications are built today (for example as microservices) and to business requirements for repeatable, reliable configuration and deployment. It lets you describe the entire containerized environment (applications, databases, reverse proxy) in one file and then deploy it repeatedly with a single command:

docker-compose up

Figure 3. Visualization of docker-compose operation. Source: microsoft.com.

Originally, multi-container applications were managed with the standalone docker-compose command. With Compose V2, the tool became a Docker CLI plugin and is invoked as docker compose (with a space), which replaces the docker-compose command. This change aligns the tool with the naming conventions of other Docker commands and gives users a more consistent experience.

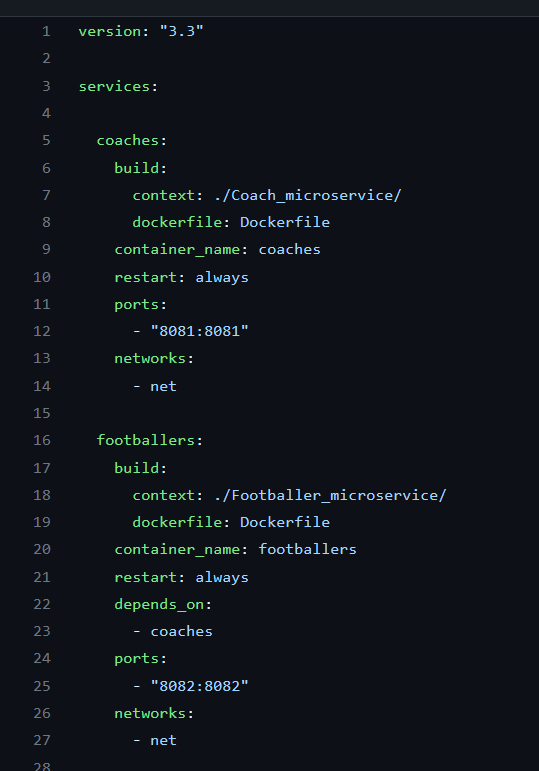

Figure 4. Example contents of the docker-compose.yml file.
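To make the structure of such a file more tangible, here is a small Python sketch that builds a Compose configuration as a dictionary and prints it as JSON. The service names (`app`, `db`), the network `backend`, the volume `db_data`, and the helper `undeclared` are all assumptions made for illustration and are not taken from the figure.

```python
import json

# Hypothetical two-service environment: an application and its database.
compose = {
    "services": {
        "app": {
            "build": ".",
            "ports": ["8080:80"],
            "environment": {"MONGO_URL": "mongodb://db:27017"},
            "depends_on": ["db"],
            "networks": ["backend"],
        },
        "db": {
            "image": "mongo",
            "volumes": ["db_data:/data/db"],
            "networks": ["backend"],
        },
    },
    "volumes": {"db_data": {}},
    "networks": {"backend": {}},
}


def undeclared(cfg):
    """Return names that services reference but the file does not declare."""
    missing = set()
    for service in cfg["services"].values():
        missing |= set(service.get("networks", [])) - set(cfg.get("networks", {}))
        missing |= set(service.get("depends_on", [])) - set(cfg["services"])
    return sorted(missing)


# Sanity check before writing the file out.
assert undeclared(compose) == []
print(json.dumps(compose, indent=2))
```

Because YAML is a superset of JSON, the printed output can be saved under a name such as docker-compose.json and passed to Compose with the -f option.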

The basic directives present in the docker-compose.yml file are:

Networking in containers

Different types of communication are used depending on the requirements. Some need no configuration at all, while others require a Docker network to be set up. Three types of communication can be distinguished in Docker: from a container to the outside world, from a container to the host, and between containers.

Let’s start with a container communicating with the outside world. By default, each container has access to the Internet if the host system has such access. We can therefore query any publicly available API on the Internet from inside the container without any additional configuration.

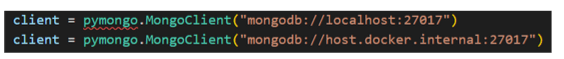

The second option is communication from inside the container to the host. If we want to reach a database running locally on the host system, we must use “host.docker.internal” instead of “localhost” in the address, because inside a container localhost refers to the container itself. Note that such a host-plus-container setup is not a good production solution; ideally, the database should be containerized as well.

Figure 5. Example of using host.docker.internal.
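The address substitution described above can be expressed as a small helper. This is a minimal Python sketch; the function name `for_container` and the example URLs are illustrative assumptions.

```python
from urllib.parse import urlsplit, urlunsplit

LOOPBACK_HOSTS = {"localhost", "127.0.0.1"}


def for_container(url):
    """Rewrite a URL that targets the host's loopback so it works inside a container."""
    parts = urlsplit(url)
    if parts.hostname not in LOOPBACK_HOSTS:
        return url  # already points somewhere other than the host loopback
    netloc = "host.docker.internal"
    if parts.port is not None:
        netloc += f":{parts.port}"
    # Preserve credentials such as user:password@ if the URL carries them.
    userinfo, _, _ = parts.netloc.rpartition("@")
    if userinfo:
        netloc = f"{userinfo}@{netloc}"
    return urlunsplit(parts._replace(netloc=netloc))


print(for_container("mongodb://localhost:27017/mydb"))
print(for_container("https://example.com/api"))
```

On Linux hosts, the name host.docker.internal may need to be mapped explicitly when starting the container, for example with --add-host=host.docker.internal:host-gateway.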

The third option is communication between two containers through a network created in Docker. This is probably the most commonly used setup and is also considered best practice: each service, such as a database and an application, or each part of a microservices-based application, runs in a separate container. If we want two containers to communicate, we add them to the same network (docker run --network). In the code, we refer to the second container by its name (using the IP address is not recommended).

Figure 6. Assigning the my_mongo_container container as the MongoDB server.

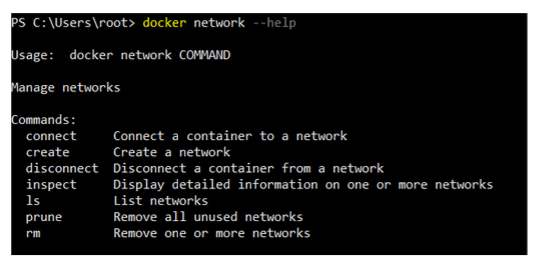

The docker network create command can be used to create a new network, which can then be used in the docker run command or in a compose file. It is worth noting that a network can also be created directly from the compose file.

Figure 7. Capabilities of the docker network command.
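The container-to-container setup can be summarized as three commands: create the network, start the database in it, and start the application in the same network with the database container's name as the host. The Python sketch below only assembles and prints these commands. The network name `my_network`, the image name `my_app_image`, and the variable `MONGO_URL` are assumptions; `my_mongo_container` is the container name used in Figure 6.

```python
import shlex

NETWORK = "my_network"               # hypothetical network name
DB_CONTAINER = "my_mongo_container"  # container name from Figure 6


def network_setup(network, db_name, app_image):
    """Commands that put a MongoDB container and an app on one Docker network."""
    return [
        ["docker", "network", "create", network],
        ["docker", "run", "-d", "--name", db_name, "--network", network, "mongo"],
        # The app reaches the database by container name, not by IP address.
        ["docker", "run", "-d", "--network", network,
         "--env", f"MONGO_URL=mongodb://{db_name}:27017", app_image],
    ]


for cmd in network_setup(NETWORK, DB_CONTAINER, "my_app_image"):
    print(shlex.join(cmd))
```

Referring to the database by name keeps the application working even if the container is recreated and receives a different IP address.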

Orchestration tools

Orchestration in the Docker environment refers to the process of automating, scaling, and managing Docker containers. It enables easy deployment of applications in containers, running them in a distributed environment. The most popular orchestration tools are Docker Swarm and Kubernetes, and although both serve a similar purpose, they have different architectures and sets of functions.

Docker Swarm is Docker's native clustering and orchestration tool. It is designed to be easy to use and is a good choice for smaller deployments or for organizations that already use Docker. Kubernetes, on the other hand, is a container orchestration system that provides a powerful API for managing containerized applications and includes features such as automatic scaling and load balancing. It is designed to be highly scalable and resilient to failures, which makes it a better choice for larger deployments or for organizations that require a high degree of automation and control over containerized applications.

Docker API

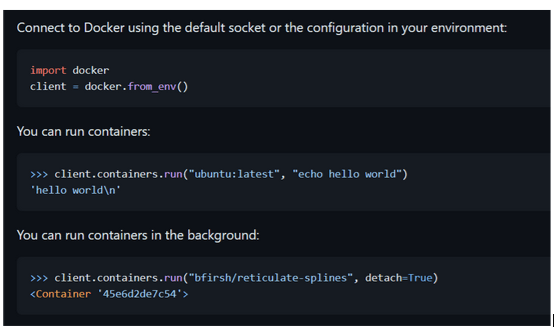

It is also worth noting that the CLI is not the only way to communicate with Docker. Docker exposes a REST API, which can be called directly or through client libraries from a programming language. This makes it possible to automate service management and routine administrator tasks even further.

Figure 8. The docker-py library.
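As a rough illustration of working with the docker-py library listed in the sources, the sketch below defines two small helpers around a client object. The helper names `summarize` and `run_once` are assumptions; `main` is defined but deliberately not called, because it needs the third-party docker package and a running Docker daemon.

```python
def summarize(client):
    """Return (name, status) for every container, running or stopped."""
    return [(c.name, c.status) for c in client.containers.list(all=True)]


def run_once(client, image="alpine", command="echo hello"):
    """Run a short-lived container and return its output as text."""
    output = client.containers.run(
        image,
        command,
        environment={"MY_VAR": "hello"},  # same role as --env on the CLI
        remove=True,                      # delete the container afterwards
    )
    return output.decode().strip()


def main():
    import docker  # third-party SDK, installed with: pip install docker

    client = docker.from_env()  # uses DOCKER_HOST or the local Docker socket
    print(run_once(client))
    print(summarize(client))
```

Calling `main()` on a machine with Docker available runs a container from the alpine image and then lists all containers known to the daemon.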

Summary

This is the final part of our adventures with Docker. We hope that our series has helped you expand your knowledge.

Sources:

https://vsupalov.com/docker-arg-vs-env/

https://github.com/docker/docker-py
