This article covers InfiniBand and RDMA, two technologies that have increased throughput and reduced latency in the interconnects of computational clusters. By eliminating bottlenecks, they improve the performance of supercomputers and other systems. Interested? Let’s dive in!

What is InfiniBand?

InfiniBand is a high-speed networking technology used to connect servers, storage devices, and other network resources in data centers and high-performance computing (HPC) environments. It was designed to provide low-latency, high-throughput connectivity and is widely used in HPC systems and supercomputers. InfiniBand uses a different protocol stack than traditional TCP/IP networks and introduces several concepts of its own:

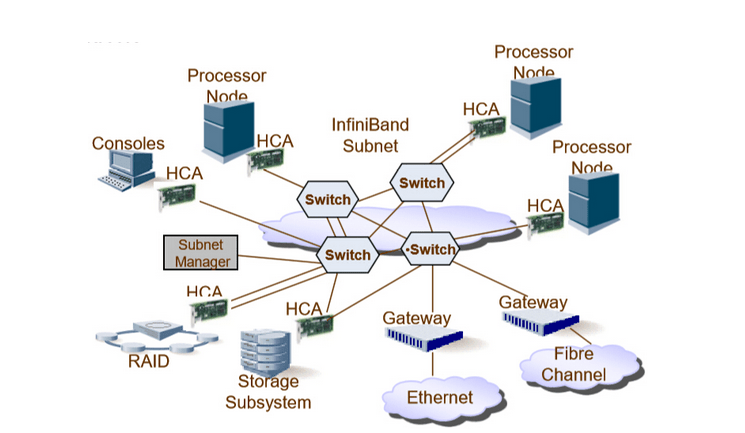

Figure 1: An example architecture of an InfiniBand network with data processing nodes and storage. Source: jcf94.com.

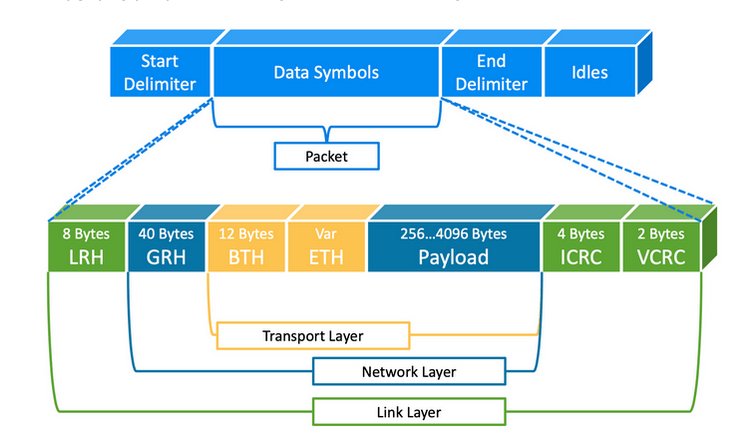

Because InfiniBand uses different protocols, its frames have a different structure than frames in standard Ethernet networks.

Figure 2: InfiniBand headers differ completely from Ethernet headers and carry identifiers unique to the InfiniBand network. Source: ACM-Study-Group.

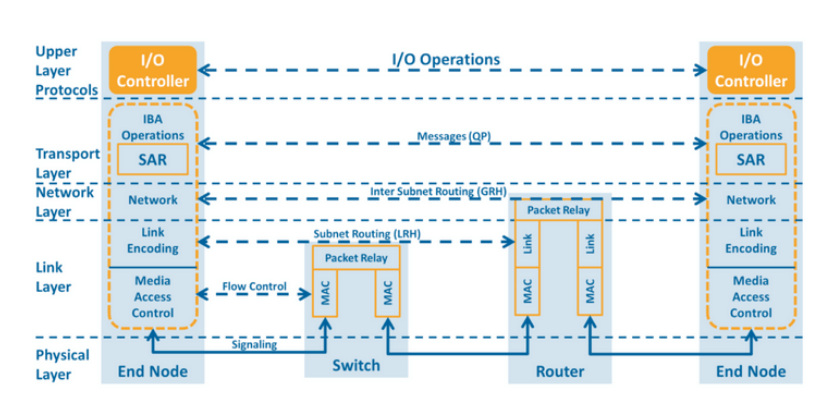

The InfiniBand protocol stack can be mapped onto a 4-layer network model as follows:

Figure 3: InfiniBand network model. Source: ACM-Study-Group.

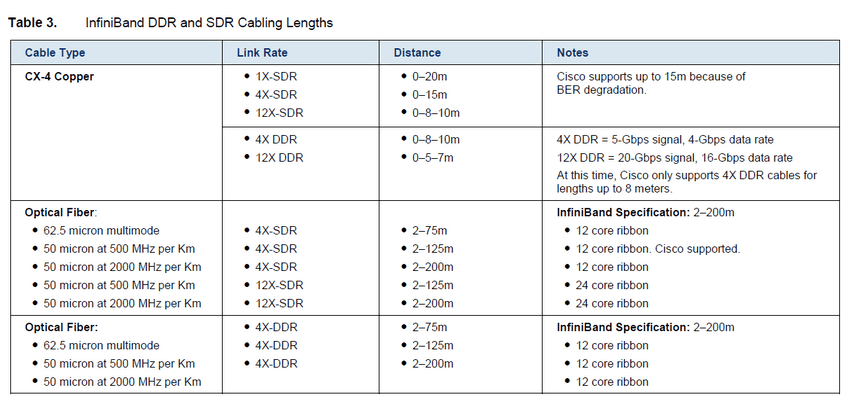

InfiniBand Cabling

InfiniBand cabling standards are defined for both copper and fiber optic cables. The requirements for building such infrastructure are notably strict. For fiber optic cables, the maximum distance is limited to 200 meters. Why? Longer fiber runs add propagation delay, which would make the cable itself a bottleneck for the entire system.

Figure 4: InfiniBand cabling standards.

Many other standards also work closely with InfiniBand and can make use of such a network in one way or another. For example:

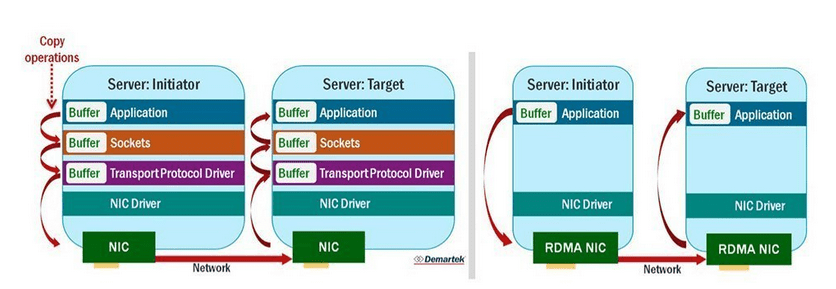

DMA vs RDMA

A key goal in designing networks for supercomputers and other high-speed systems is eliminating bottlenecks caused by technological limitations. Two such bottlenecks were the involvement of the processor in network communication and the overhead of the network stack. RDMA addresses both, making it possible to achieve latencies at the microsecond level.

DMA (Direct Memory Access) is a technology that allows data to be transferred between a device and memory without processor intervention. DMA improves system performance by offloading the processor and allowing it to perform other tasks while data is being transferred.

Remote Direct Memory Access (RDMA) is access to the memory of one computer from another over a network without involving the operating system, processor, or cache memory. It improves the throughput and performance of systems. Operations such as read and write can be performed on a remote machine without interrupting the processor’s work on that machine. This speeds up data transmission in networks where low latency is critical.

An integral part of RDMA is the concept of zero-copy networking, which allows data to be read directly from the main memory of one computer and written directly to the main memory of another. RDMA data transfers bypass the kernel network stack on both computers, improving network performance. As a result, communication between two systems completes much faster than over a comparable non-RDMA network.
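The difference between copying data and sharing it in place can be illustrated with a small single-process analogy. This is only a sketch of the zero-copy idea, not RDMA itself: it uses Python's built-in `memoryview` rather than a network adapter, and the helper names `copy_slice` and `zero_copy_slice` are hypothetical, chosen for illustration.

```python
def copy_slice(buffer: bytearray, length: int) -> bytes:
    """Return the first `length` bytes as an independent copy (new memory)."""
    return bytes(buffer[:length])


def zero_copy_slice(buffer: bytearray, length: int) -> memoryview:
    """Return a view onto the first `length` bytes without copying them."""
    return memoryview(buffer)[:length]


# Stand-in for an application buffer that would be handed to the network.
buffer = bytearray(b"x" * 1024)

copied = copy_slice(buffer, 16)       # data duplicated, as in a copy-based path
shared = zero_copy_slice(buffer, 16)  # same memory, as in a zero-copy path

# Change the original buffer after both slices were taken.
buffer[0] = ord("A")

print("copy sees the change:", copied[0] == ord("A"))
print("view sees the change:", shared[0] == ord("A"))
```

The copy keeps the old byte because it lives in separate memory, while the view reflects the change because it points at the original buffer. Zero-copy networking applies the same principle between an application's memory and the network adapter, avoiding intermediate copies through kernel buffers.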

Figure 5: Comparison of traditional network adapters with RDMA-enabled ones.

According to research (WangJangChenYiCui’17), the weakest RDMA implementation has 10 times lower latency than the best non-RDMA implementation. For InfiniBand, this latency is 1.7 microseconds. At that scale, cable length matters: light in fiber is delayed by around 5 microseconds per kilometer. That’s why, as mentioned earlier, fiber optic runs are limited to 200 meters, which keeps the cable itself from becoming a bottleneck.

Fiber optic delay calculator:

https://www.timbercon.com/resources/calculators/time-delay-of-light-in-fiber-calculator/
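The figure of roughly 5 microseconds per kilometer can be checked with a short calculation. The sketch below assumes a refractive index of 1.468 for the fiber core, a typical value for silica fiber that is not given in this article; the function name `fiber_delay_us` is hypothetical.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # speed of light in vacuum
ASSUMED_REFRACTIVE_INDEX = 1.468      # assumption: typical silica fiber core


def fiber_delay_us(length_m: float,
                   refractive_index: float = ASSUMED_REFRACTIVE_INDEX) -> float:
    """One-way propagation delay through a fiber, in microseconds."""
    if length_m < 0:
        raise ValueError("length_m must be non-negative")
    # Light travels more slowly in glass than in vacuum by the refractive index.
    speed_in_fiber = SPEED_OF_LIGHT_M_PER_S / refractive_index
    return length_m / speed_in_fiber * 1e6


if __name__ == "__main__":
    for length_m in (200, 1000):
        print(f"{length_m} m: {fiber_delay_us(length_m):.2f} us")
```

Under this assumption, 1 kilometer of fiber adds about 4.9 microseconds and 200 meters adds just under 1 microsecond, which is already the same order of magnitude as the 1.7 microsecond InfiniBand latency quoted above.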

Ethernet vs. HPC

Ethernet is a widely used network technology that is also utilized in some high-performance computing (HPC) environments. In the past, Ethernet was not considered a suitable interconnect technology for HPC due to its high latencies and lower throughput compared to other HPC interconnects like InfiniBand. However, with the development of new Ethernet standards and technologies such as RDMA over Converged Ethernet (RoCE), Ethernet is becoming a cost-effective option for certain HPC workloads. RoCE enables low-latency, high-throughput data transfer between servers and storage devices, making it a promising option for HPC clusters requiring high-speed interconnects.

Summary

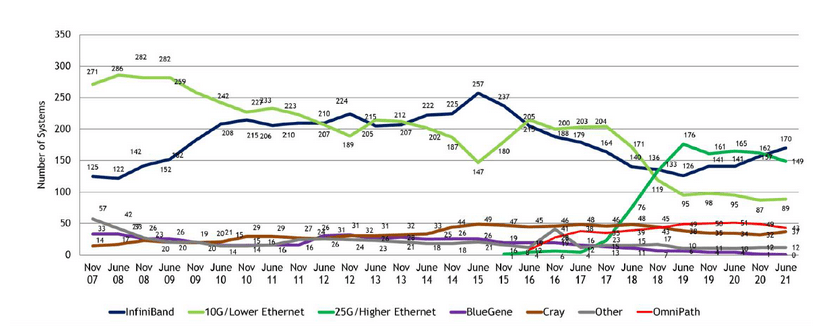

Many technologies contribute to steadily increasing throughput and reducing latency or cost. Scientists are conducting research on multiple fronts, while businesses seek cost-effective solutions for supercomputers. The statistics below show which interconnect technologies are most commonly used in high-performance systems.

Figure 6: Technological trends for the top 500 supercomputers, source: Nvidia.

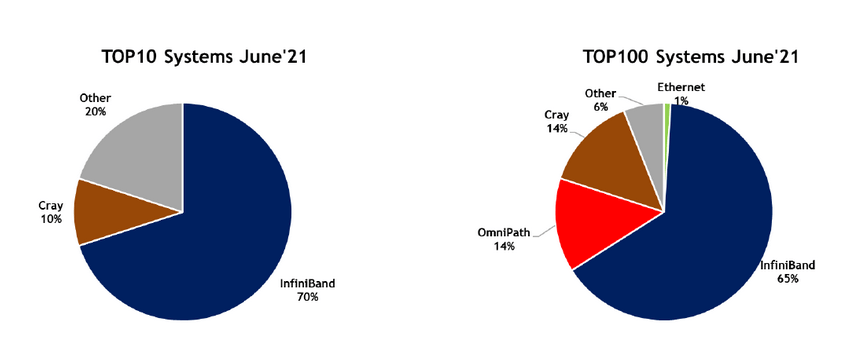

In recent years, the two technologies described in this article, InfiniBand and Ethernet, have clearly dominated.

Figure 7: Percentage share of different interconnect technologies in the top 10 and top 100 systems, source: Nvidia.

Despite the visible growth in the significance of Ethernet in recent years, it has virtually no presence in the top 100 ranking, where InfiniBand dominates. We encourage you to explore the TOP500 list, which presents the parameters and interconnect of each supercomputer: https://www.top500.org/lists/top500/list/2022/11/

We hope this article has given you some solid knowledge about supercomputers and the interconnects used in them. It is a broad topic in which large companies invest because of its wide range of applications.

Sources:
